Why focus on genomics?

As fun as increasing context length benchmarks is, the potential impact of improving context lengths and expressivity in genomics is what’s compelling to us. At 1 million tokens, HyenaDNA is up to 500x longer than previous genomic FMs. For comparison, we also benchmark against a Transformer (up to 16k tokens).

Recently, Hyena, a convolutional LLM, showed it could match attention in quality with lower compute and time complexity, enabling much longer sequences in associative recall tasks. (check out our other blogs on Hyena: blog 1, blog 2, blog 3)

But could Hyena work on genomics? What new capabilities could a long context genomic foundation model (FM) enable?

Turns out, the answer is yes! We're *excited* to introduce HyenaDNA, a genomic foundation model pretrained on sequences of up to 1 million tokens long at single nucleotide resolution.

To pretrain HyenaDNA, we randomly sample a sequence from the Human Reference Genome and learn to predict the next nucleotide. Our architecture is a simple stack of Hyena operators, with a single character tokenizer, and primary vocabulary of 4 (A, C, T, G). We then apply the pretrained model on over 28 downstream tasks, as well as explore the first use of in-context learning in genomics.

We show that as one increases context length, we can reach better performance measured in improved perplexity. HyenaDNA trains up to 160x faster than Transformers with FlashAttention, uses a single-character tokenizer, and has global context at each layer. We apply HyenaDNA on 28 genomic tasks (SotA on 23), using far fewer parameters than previous genomic models, with examples that can fit on colab.

We'll definitely dive into the experiments later, as well as the model details of HyenaDNA. But first, we want to share why we think genomics is an incredibly fascinating (and potentially underexplored) domain for deep learning research.

We pretrain a family of HyenaDNA models across model size and sequence length. We observe that as we increase context length, we're able to achieve better perplexity (improved next token accuracy).
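To make the pretraining setup a bit more concrete, here is a minimal sketch of single-character tokenization, next-nucleotide training pairs, and perplexity. It is an illustration and not the HyenaDNA code: the integer ids in `VOCAB`, the helper names (`tokenize`, `sample_window`, `perplexity`), and the random toy "genome" are all assumptions made for this example.

```python
import math
import random

# Assumed mapping: the post only says the primary vocabulary is A, C, T, G.
# The integer ids are arbitrary and chosen for illustration.
VOCAB = {"A": 0, "C": 1, "T": 2, "G": 3}


def tokenize(sequence):
    """Map each nucleotide to one integer id (one token per character)."""
    return [VOCAB[base] for base in sequence.upper()]


def sample_window(genome, context_length, rng):
    """Pick a random window and build (inputs, targets) for next-nucleotide prediction."""
    start = rng.randrange(len(genome) - context_length)
    ids = tokenize(genome[start:start + context_length + 1])
    # Targets are the inputs shifted left by one position.
    return ids[:-1], ids[1:]


def perplexity(probabilities, targets):
    """exp of the mean negative log-likelihood given to the true next tokens."""
    nll = [-math.log(p[t]) for p, t in zip(probabilities, targets)]
    return math.exp(sum(nll) / len(nll))


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical stand-in for a reference genome, not real DNA.
    toy_genome = "".join(rng.choice("ACTG") for _ in range(1000))
    inputs, targets = sample_window(toy_genome, context_length=16, rng=rng)
    # A "model" that assigns equal probability to all four nucleotides.
    uniform = [[0.25] * 4 for _ in targets]
    print(perplexity(uniform, targets))
```

A model that guesses uniformly over the four nucleotides has a perplexity of about 4, so any value below that means the model has learned something about the sequence. Lower perplexity corresponds to better next-token prediction.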

In particular, the field with the longest sequences is arguably genomics, which is the study of all genetic material present in an organism. It includes the analysis of its function, structure, and evolution. To give a sense of the scale, the human genome (an entire set of DNA) has 3.2 billion nucleotides, which are the building blocks of DNA and can be seen as “characters” in the sequence.

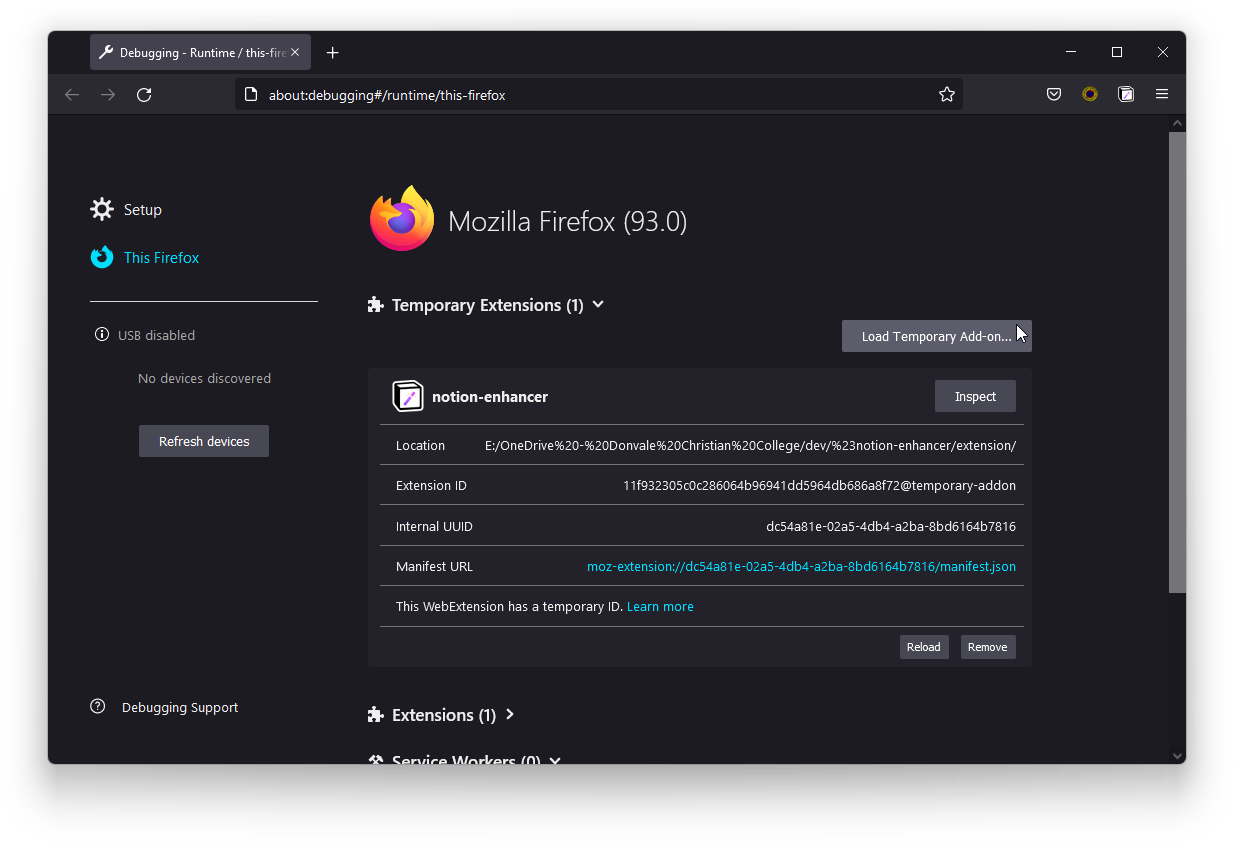

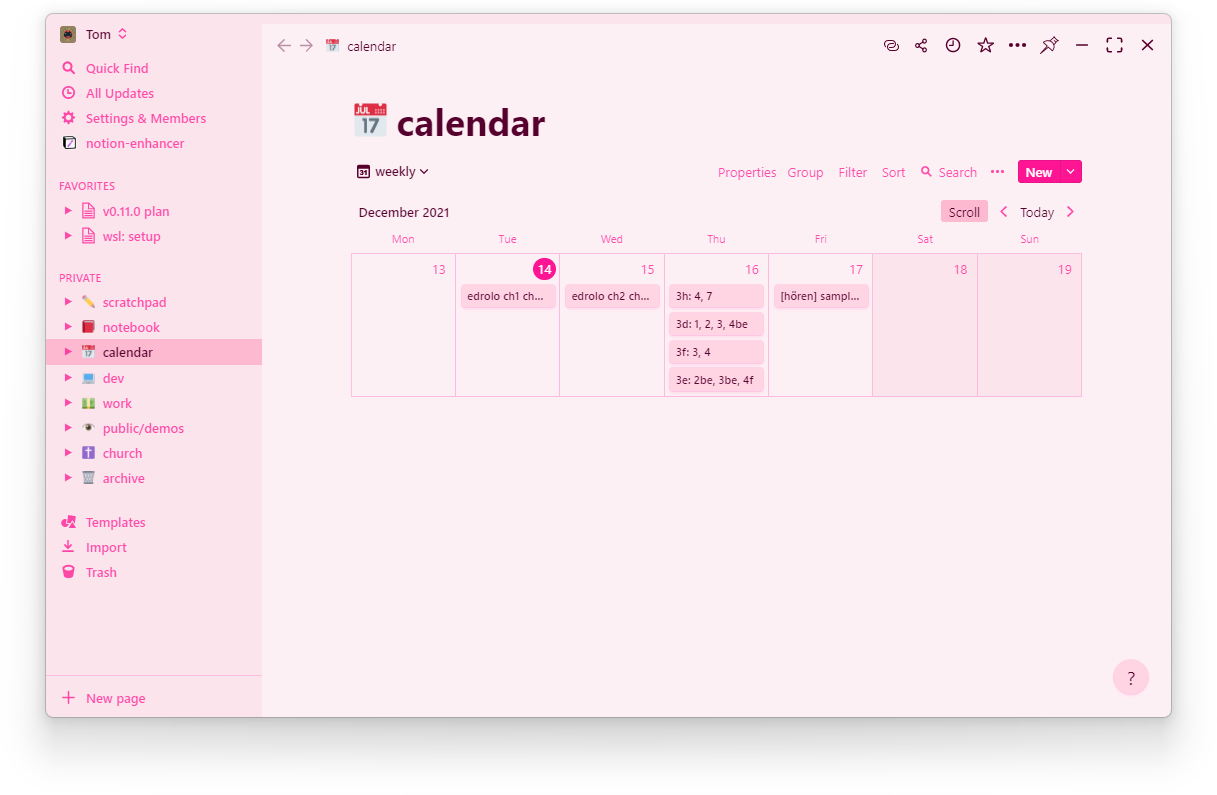


HyenaDNA is pretrained on the human reference genome, and sets new SotA on 23 downstream tasks including predicting regulatory elements, chromatin profiles, and species classification. We also explore what new capabilities open up with long context in genomics, including in-context learning with soft prompt tuneable tokens and instruction fine-tuning.

Resources

Arxiv colab github huggingface (ckpts)

Long context for DNA

Long context models are all the rage these days. OpenAI stated a goal to reach 1M tokens, while Magic announced they reached 5M tokens for code. We’re all for it, the more minds working on it, the more progress and amazing use cases! But while most have focused on natural language and code (in the long context arms race), we noticed no one was paying much attention to a field that is inherently made of ultralong sequences: biology.

We're excited to introduce HyenaDNA, a long-range genomic foundation model with context lengths of up to 1 million tokens at single nucleotide resolution!

Image generated by Adobe Firefly with prompt, "cute Hyena with DNA sequences".
